
Personal perspective | 10.1172/JCI39424

How to write an effective referee report

Ushma S. Neill, Executive Editor, The Journal of Clinical Investigation


Published May 1, 2009

J Clin Invest. 2009;119(5):1058–1060. https://doi.org/10.1172/JCI39424.

© 2009 The American Society for Clinical Investigation

-

Abstract

Most scientists learn how to review papers by being thrown into the deep end of the pool: here’s a paper, write a review. Perhaps this is not the best way to keep an enterprise afloat. But what should be included in a review? What should the tone of a review be? Here I want to outline the specifics of what we at the JCI are looking for in a referee report.

For the editorial process to be successful, a few things are paramount: a well-written and novel manuscript, an informed and unbiased editor, and constructive comments from peer reviewers. We’ve already published pieces on how to write a scientific masterpiece (1) and what to expect from the editors (2, 3), but so far, we haven’t paid as much attention to another important group: referees. In addition to the myriad tips listed by the Editor in Chief (4), here are a few other things to keep in mind.

-

What is the point of a review?

Peer review should help to improve a paper that is already scientifically sound. The editors have a rule: if a manuscript is sent for external review, we feel that it could ultimately, if appropriately revised, appear in the journal. What we are looking for above all is comment on whether the experiments are well designed, executed, and controlled and whether they justify the conclusions drawn. Reviewing the paper is distinct from rewriting it for the authors; remember that this is the authors’ paper.

-

Structure and function of a good review

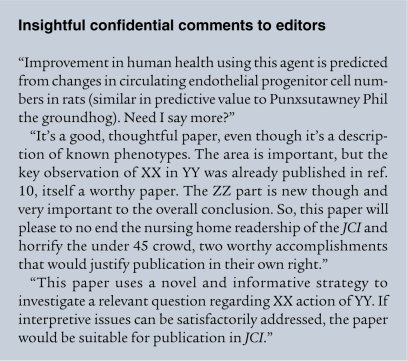

We use a form that requires referees to separate their comments into two fields: confidential comments to the editors and comments to be transmitted to the authors. Feel free to speak plainly and passionately, if you wish, in the confidential comments field (see Insightful confidential comments to editors). Your confidential comments need not recapitulate exactly what you have written for the authors. It is in the confidential comments field that you should give your views on whether the manuscript is appropriate for the JCI; statements of this nature should not be transmitted to authors. Putting them in the comments for authors risks giving conflicting feedback, which is unfair to both the authors and the editors: for example, telling the authors that their paper should be acceptable to the JCI and describing it as “potentially interesting and important,” but then disparaging the conclusions in the confidential comments.

Summarize the science. In the comments for authors, start with a few sentences of introduction that outline what you think were the authors’ hypothesis, their main results, and the conclusions drawn. This is important because it shows us what you think were the major advances, as opposed to what the authors might argue are the major conclusions. Be specific about what you think the study adds or changes in our understanding of the pathway or disease under investigation and/or where it leaves us in terms of a conceptual advance. This allows the editors and authors to see how an expert in the field viewed the paper. This first paragraph need not be long and is best finished with a statement of whether the conclusions are supported by the data and whether major or minor changes are required. An exemplary introductory paragraph, taken from the review of a recently published paper (5), serves as a model (see What we are looking for in an opening paragraph).

In the rest of the review, break your comments into two bulleted or numbered sections: major comments and minor comments. Speak plainly but dispassionately, in the same tone you would use in writing a manuscript. There is no need for all caps, exclamation points, or complicated analogies. The goal is for the entire review to be no more than 750 words. Using 20 words when you can use one will impress no one, and shorter reviews (within reason) are usually more helpful and constructive than longer ones. On this point, it is easy to tell when a review has been handed to a junior associate: the review is often overly long and lacks a big-picture view of the hypothesis and its implications. If you agreed to write the review, it is fine to review the manuscript with someone else in your lab; in fact, the editors realize that this is an important part of mentorship. But declare in your confidential comments that you had a co-reviewer, make sure that you have edited the final report as needed, and remember that you have assumed ultimate responsibility for its contents.

Determine any deficiencies. The key is to evaluate whether the experiments adequately address the hypothesis and support the conclusions. Keep it simple. Manuscripts generally propose a hypothesis and then test it experimentally. The results constitute an argument, usually in support of, although sometimes refuting, the hypothesis. Start by understanding what question is being asked. Do the experiments (and approach) adequately test the hypothesis? Do the results justify the conclusions or model? Are the studies convincing? If yes, say so. If no, state as clearly as possible which aspects are not convincing, and outline experimental approaches that might be useful to address the question.

You need to focus on asking only for experiments that would bolster the foundation of the conclusions or add key mechanistic insight. There is no point in sending the authors on a fishing expedition to add new data that might be nice but are essentially frippery. When you ask for a specific experimental addition, justify the need for the request: “Since the relevance of the in vitro cell model for studying interactions between XX cells and YY is questionable, the authors would need to repeat the key experiments in the ZZ in vivo model.” If the timeline for such a request is long and the experiment is key, then rejection should be recommended. You have to determine whether the experiments requested are justifiable in terms of the overall conclusions. It is unlikely that we would encourage resubmission if the referees ask for new animal lines, experiments that take more than a year, or synthesis of completely new reagents.

There are also inherent dangers in asking for a specific experiment and outlining what you want to see from it. All too often, we have seen data added in response to such requests that are too good to be true. Phrase your criticisms so that you ask for an experiment without predicting what you want to see from it (see How to properly phrase an experimental request).

Minor comments. Within the minor comments section of the review, most referees include mention of typographical mistakes and request minor changes to the text and figures, but you can relegate some of the experimental requests to this section as well, if you think that they are not crucial to the overall conclusions. Most authors will still respond to these comments. You should obviously correct any factually incorrect statements, but offer — don’t demand — suggestions about interpretation of data.

Shifting goalposts. When reviewing a revision, don’t come up with completely new experiments for the authors to do that weren’t mentioned in the first round of review. The caveat to this is that it is appropriate to challenge any new data that do not support the central hypothesis. While most authors will be responsive to the critiques, not all are, and we encourage referees to evaluate this in a re-review. Authors get one shot to revise, and if they have not provided a robust response, the manuscript should not be given a free pass.

In some cases, authors will do the experiments, but the data don’t sustain the hypothesis. Most will still add the data and send the paper back. As a referee, you need to let us know when the new data don’t make the paper better. In one rare case, after we encouraged resubmission of his manuscript, an author wrote to us to withdraw it from further consideration, because his new data couldn’t be shoehorned into supporting the original conclusions (see Letter from an author).

In a similar vein, we want to move away from the practice of authors holding a little something back from their initial submission so that they can appear responsive to the referees. It is of course understandable if new data are added, since the work may still have been ongoing at the time of submission. But we would prefer a complete story at initial submission, one that we can accept pending minor revisions, to a flawed story that comes back a week later with all of the requested experiments hastily added.

Rankings and recommendations. Consider your overall recommendation carefully. Given that we accept fewer than 10% of the papers we receive, we need a sense from our referees of whether the paper falls in this top tier or whether, even if revised, it would never reach this priority. By asking for a rating (both a category, accept/accept with revision/reject, and a ranking, top 10%/top 25%/top 50%), we want to know whether the paper is interesting enough to publish, not merely whether it is scientifically faultless, or what would make the paper good enough when not all the i’s are dotted but the concept is intriguing and scientifically important. In many ways, this is the most important question you have to address, and it is where the editorial board depends on you the most.

-

Déjà vu?

Should you agree to review a manuscript if you have previously reviewed it for another journal? Is this a case of double jeopardy? Our position is that you should declare this in response to the invitation and let the editors decide. In some cases, if the previous review was for a journal like Science, it is possible that the manuscript is more appropriate for the JCI and that the points you previously made remain valid. In this case, we will likely want your opinion but would ask you to look carefully at the current version to see whether the manuscript has been improved. You should not simply provide the authors and us with a carbon copy of the earlier review. In other cases, we may forgo your re-review in order to seek alternative views on the manuscript.

Speaking of prejudice, it is worth mentioning that we do have a policy on referee conflicts of interest. Specifically, referees should recuse themselves if they collaborate with the authors or if there is a material conflict, financial or otherwise. We ask that referees inform the editors of any potential conflicts that might be perceived as relevant as early as possible after being invited to review, and we will determine how to proceed. Disclosing a potential conflict does not invalidate a referee’s comments; it simply provides the editors with additional information relevant to the review. The editors consider known conflicts when choosing referees, but we cannot be aware of all relationships, financial or otherwise, and therefore rely on referees to be transparent on this issue (3).

-

The editors’ prerogative

Different journals interpret referee reports in different ways, given that some are run by professional editors and others, like the JCI, have editorial boards composed largely of practicing scientists. We can only comment on how things work at the JCI: how we discuss papers and what we emphasize as important.

At the JCI, the editors truly give no weight to whether a particular manuscript could potentially be highly cited or newsworthy, but simply consider whether it is medically relevant, novel, and important for our broad readership. Therefore, we would ask you not to comment on citation potential or newsworthiness within your review. Also note that the board’s decision does not rest on a referee majority: one well-worded, persuasive review can overrule others that are not substantive or constructive.

The editors collectively serve as the last referee: the ultimate arbiters who have to be convinced of the merits of the study. This is where the authors’ cover letter and the referees’ first paragraphs and confidential comments are of great help. The editor must be in a position to make decisions, to overrule the referees when their demands are unreasonable, and to edit comments that are mean for the sake of being mean. Amusingly, the harshest reviews often come from referees suggested by the authors.

The name game. We also try not to pay any attention to who the authors are. Obviously we don’t live in a vacuum, but no JCI editor comments on the merits of the investigator in the course of discussing a manuscript. Much has been said about how the peer review system is flawed and would be best done in a blinded fashion, with referees having no idea who the authors are. We are unsure whether referees are influenced by the authors’ names and are softer on high-profile names than they should be, but this is exactly where an editorial board’s role is key: it would be impossible for a particular editor to pass a mediocre manuscript from a big-name lab in their own field past a group of editors who might otherwise be unfamiliar with that person.

Let the judges be judged. Each review we receive is given a grade that reflects its quality and constructiveness, and over time, each referee accumulates an averaged score. Because we try to use experienced reviewers who are familiar with the journal, we can see at a glance who has given us good advice in the past and who has provided biased, unhelpful advice. Happily, the large majority of reviews we receive are constructive. A journal like the JCI can exist only with the support and efforts of peer reviewers who spend hours reading manuscripts and delivering their verdicts, and we thank you for your efforts on our behalf.

-

Acknowledgments

I am thankful to many people who assisted in discussion of these ideas, including Brooke Grindlinger, Mitch Lazar, Domenico Accili, Anthony Ferrante, Bob Farese Jr., Mike Schwartz, Don Ganem, Larry Turka, Mark Kahn, Gary Koretzky, and Morris Birnbaum. I would also like to thank those listed in the masthead as Consulting Editors for providing the JCI with consistently high-quality, helpful reviews.

-

Footnotes

Reference information: J. Clin. Invest. 119:1058–1060 (2009). doi:10.1172/JCI39424

-

References

- Neill, U.S. 2007. How to write a scientific masterpiece. J. Clin. Invest. 117:3599-3602.

- Turka, L.A. 2007. Evolution, not revolution. J. Clin. Invest. 117:504-505.

- Neill, U.S., Thompson, C.B., Feldmann, M., Kelley, W.N. 2007. A new JCI conflict-of-interest policy. J. Clin. Invest. 117:506-508.

- Turka, L.A. 2009. After further review. J. Clin. Invest. 119:1057.


- Qatanani, M., et al. 2009. Macrophage-derived human resistin exacerbates adipose tissue inflammation and insulin resistance in mice. J. Clin. Invest. 119:531-539.

-


ISSN: 0021-9738 (print), 1558-8238 (online)